Health spending starts to rise but remains weak in Europe, says OECD

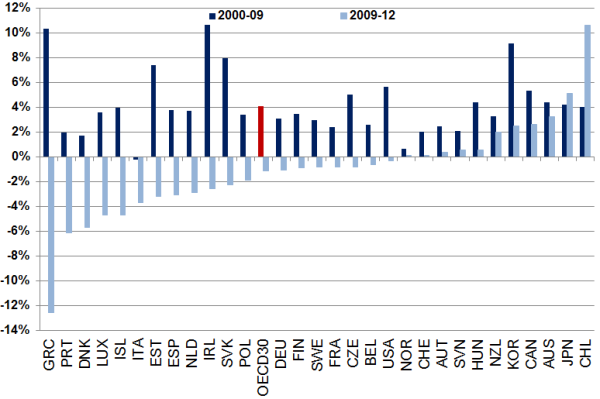

In Europe, health spending continued to fall in 2012 in Greece, Italy, Portugal and Spain, as well as in the Czech Republic and Hungary. In Greece, health spending in real terms was 25% lower in 2012 than in 2009, primarily driven by cuts in public spending. By contrast, outside Europe, Chile and Mexico saw strong growth in health spending in 2012, at 6.5% and 8.5% respectively, largely due to further efforts towards universal coverage and access to healthcare. Health spending in Korea has continued to grow at an annual rate of 6% since 2009, mainly driven by increases in private spending. In the United States, health spending grew by 2.1% in 2012, above the OECD average but similar to growth rates in 2010 and 2011.


Continued reductions in pharmaceutical spending

While spending on hospital and outpatient care grew in many countries in 2012, almost two-thirds of OECD countries have experienced real falls in pharmaceutical spending since 2009. Reductions have been driven by price cuts, often achieved through negotiations with manufacturers, and by a growing share of the generic market. This share has increased due to patent expirations for a number of high-volume and high-cost brand-name drugs, and to policies promoting the use of cheaper generic drugs.

The share of the generic market grew on average by 20% between 2008 and 2012 to reach 24% of total pharmaceutical expenditure. The increase was particularly steep in Spain (+100%), France (+60%), Denmark (+44%) and the United Kingdom (+28%).

Annual growth in pharmaceutical spending, in real terms

These are some of the recent trends shown in OECD Health Statistics 2014, the most comprehensive source of comparable statistics on health and health systems across the 34 OECD countries. Covering the period 1960 to 2013, this interactive database can be used for comparative analyses of health status, risk factors for health, health care resources and utilisation, and health expenditure and financing.

Country notes are available for all 34 OECD countries at www.oecd.org/health/healthdata. This website also includes an Excel file with 50 key indicators.

OECD Health Statistics 2014 is available in OECD.Stat, the statistics portal for all OECD databases.

An embeddable data visualisation for this publication is available at: www.compareyourcountry.org/health.

Please use the ‘+share/embed’ button to customize this tool for your country and language and to generate the embed code for your website.

For further information about the content, please contact Francesca Colombo (tel. +33 1 45 24 93 60), Gaétan Lafortune (tel. +33 1 45 24 92 67) or David Morgan (tel. +33 1 45 24 76 09) in the OECD Health Division.
